For AI to tackle real-world problems and generalise, it must build an “understanding” of them. We ground our approaches in real-life data and design them to be explainable.

Our world is characterised by enormous visual variability and diversity. Yet humans have an incredible ability not only to focus on the relevant and useful information but also to translate it into the high-level concepts necessary for reasoning and decision making.

Our Vision and Language team focuses on building state-of-the-art systems that learn to fuse visual information with linguistic common sense in order to achieve human-level, human-inspired understanding of visual scenes. We aim to accurately recognise how objects interact and what common sense lies behind these interactions, to answer questions about images in an explainable manner, and to provide correct reasoning explanations.

The Team

The team builds on our relations with academic partners and consists of long- and short-term research trainees as well as diploma thesis trainees. Our collaborations span several technical universities and departments (e.g. National Technical University of Athens, University of Patras).

Vassilis Pitsikalis

Group Lead

Markos Diomataris

Project Supervision

Manos Zaranis

Mary Parelli

Alexandros Benetatos

Zacharias Anastasakis

Orestis Papanikolaou

Danai Brilli

Projects

Learning the Spatial Common Sense of Relations

Due to imbalanced training data, scene graph generation models tend to predict relations based only on which objects interact and not on how. For example, a person will be predicted to sit on a chair regardless of their spatial positioning. Our method mitigates this flaw by enforcing grounding consistency between two inverse problems, namely relationship detection and grounding.

Weakly Supervised Visual Relationship Detection

Much as humans do, high-level representations of images as relation graphs should distinguish salient from unimportant information in an image. However, current datasets do not provide this information. We tackle this challenge by using image-caption pairs as weak annotations for learning dense object relations as well as their importance.

Recognising Unseen Relations using External Knowledge

Datasets providing relation annotations span only a small subset of plausible relation combinations. For example, an elephant wearing glasses is very unlikely to be seen during model training, but human common sense dictates that it is certainly plausible. How can we learn the common sense behind relations and enable detection of previously unseen combinations of objects?

Explainable Visual Commonsense Reasoning

Our area of interest is Visual Commonsense Reasoning with a focus on interpretability and explainability. Given a set of movie scenes and corresponding questions, our task is not only to choose the correct answer but also to provide a plausible explanation.

Learning the Spatial Common Sense of Relations

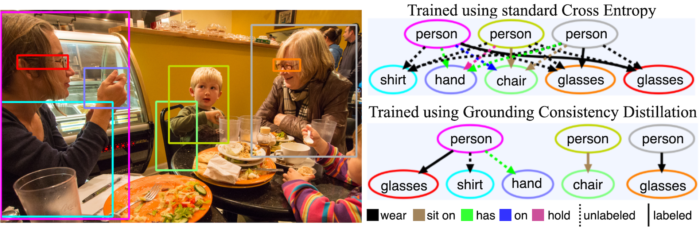

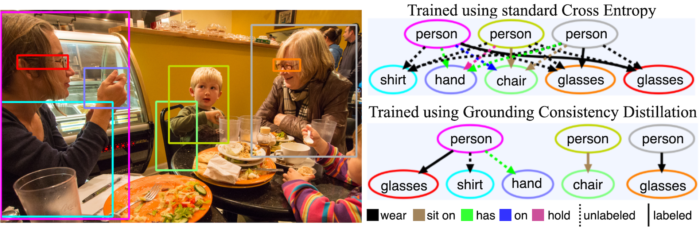

Current visual relationship datasets exhibit a strong reporting bias, i.e. annotators tend to label some object pairs more often than others. For example, a person will be related to a chair only if they are sitting on it, or to a pair of glasses only when wearing them. This causes models to neglect visual information and predict unimaginatively from semantics alone: every person will always sit on every chair, wear every shirt, etc. (see figure on the left).
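To make the effect of this bias concrete, here is a minimal sketch of a predictor that relies on class semantics alone. The toy annotations and the function names are hypothetical and serve only as an illustration; they are not taken from any of our datasets or models.

```python
from collections import Counter, defaultdict

# Hypothetical toy annotations: (subject class, predicate, object class).
annotations = [
    ("person", "sitting on", "chair"),
    ("person", "sitting on", "chair"),
    ("person", "sitting on", "chair"),
    ("person", "standing next to", "chair"),
    ("person", "wearing", "glasses"),
]

def fit_frequency_prior(triplets):
    """Count how often each predicate is annotated for a (subject, object) class pair."""
    counts = defaultdict(Counter)
    for subj, pred, obj in triplets:
        counts[(subj, obj)][pred] += 1
    return counts

def predict_from_semantics(counts, subj, obj):
    """Return the most frequent predicate for the class pair, ignoring all visual input."""
    return counts[(subj, obj)].most_common(1)[0][0]

prior = fit_frequency_prior(annotations)
prediction = predict_from_semantics(prior, "person", "chair")
print(prediction)
```

Because the prediction never looks at where the person and the chair are in the image, every person-chair pair receives the same predicate, which is the failure mode described above.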

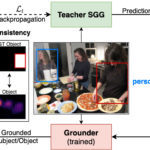

Methodology: Grounding Consistency

We combine visual relationship detection (VRD) with its inverse problem, namely grounding, and construct a semi-supervised closed-loop training scheme. By enforcing consistency between the two, the scheme learns from unlabelled samples and captures the spatial common sense they express, mitigating the language bias.
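The idea of closing the loop between detection and grounding can be sketched as follows. This is a toy illustration under stated assumptions, not our actual training code: `toy_grounder` is a hypothetical rule-based stand-in for a learned grounding model, and the loss form (expected grounding error under the detector's predicate distribution) is one plausible choice among many.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def toy_grounder(subject_box, predicate):
    """Hypothetical grounder: guess where the object should be for a predicate."""
    x1, y1, x2, y2 = subject_box
    if predicate == "sitting on":
        # Object expected around the lower half of the subject (y grows downwards).
        half = (y2 - y1) / 2
        return (x1, y1 + half, x2, y2 + half)
    # Any other predicate (here "standing next to"): object directly to the right.
    return (x2, y1, x2 + (x2 - x1), y2)

def consistency_loss(predicate_probs, subject_box, object_box):
    """Expected grounding error under the detector's predicate distribution."""
    return sum(
        prob * (1.0 - iou(toy_grounder(subject_box, pred), object_box))
        for pred, prob in predicate_probs.items()
    )

person = (0.0, 0.0, 2.0, 4.0)
chair_beside = (2.0, 0.0, 4.0, 4.0)  # the chair is next to the person, not under them

biased = {"sitting on": 0.9, "standing next to": 0.1}
spatially_aware = {"sitting on": 0.1, "standing next to": 0.9}

loss_biased = consistency_loss(biased, person, chair_beside)
loss_aware = consistency_loss(spatially_aware, person, chair_beside)
print(loss_biased, loss_aware)
```

No ground-truth predicate is needed to compute this loss, which is why a consistency signal of this kind can be applied to unlabelled pairs: a detector that predicts "sitting on" regardless of the boxes is penalised because the grounder cannot recover the object's true location from that predicate.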

Contributions

- A new training method able to take advantage of unlabelled samples

- A training scheme that does not assume any prior knowledge of the dataset’s statistics

- State-of-the-art scene graph generation (SGG) models that better disambiguate scenes

- New evaluation metrics that capture performance on unlabelled samples

Markos Diomataris

Project Supervision

Nikolaos Gkanatsios

(External Collaborator)

Explainable Visual Commonsense Reasoning

Given a set of movie scenes and corresponding questions, our task is not only to choose the correct answer but also to provide a plausible explanation. This task requires a combination of linguistic common sense (e.g. an office generally contains computers) and visual grounding capabilities (e.g. locating the computer in order to deduce where a scene takes place).

We are building model architectures that are interpretable by design. We are able to visualise where exactly a model “looks” in order to give a specific answer (see figure on the right). This enables us not only to understand why our models make certain predictions, but also to use those interpretability variables as part of our training objective.
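One way to use an interpretability variable in the training objective is to add a term that penalises attention placed on irrelevant regions. The sketch below assumes that a reference relevance distribution over image regions is available; the names `total_loss`, `reference_attention` and `weight` are hypothetical and do not describe our actual models.

```python
import math

def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy H(target, predicted) between two discrete distributions."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))

def total_loss(answer_probs, correct_answer, attention, reference_attention, weight=0.5):
    """Answer loss plus a weighted penalty when attention misses the reference regions."""
    answer_loss = -math.log(answer_probs[correct_answer] + 1e-12)
    attention_loss = cross_entropy(reference_attention, attention)
    return answer_loss + weight * attention_loss

answer_probs = [0.1, 0.7, 0.1, 0.1]  # probabilities over four candidate answers
reference = [0.0, 1.0, 0.0]          # hypothetical annotation: region 1 is the relevant one
focused = [0.05, 0.9, 0.05]          # attention concentrated on the relevant region
scattered = [0.4, 0.2, 0.4]          # attention spread over irrelevant regions

loss_focused = total_loss(answer_probs, 1, focused, reference)
loss_scattered = total_loss(answer_probs, 1, scattered, reference)
print(loss_focused, loss_scattered)
```

Both attention maps accompany the same correct answer, yet the scattered one incurs a higher loss, so the model is encouraged to be right for the right reasons.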

Mary Parelli

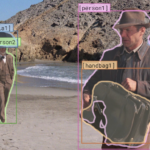

Recognising Unseen Relations using External Knowledge

We employ a GNN enhanced with multi-head attention and train it in a self-supervised way using contrastive losses. Our goal is to create meaningful context representations by learning different properties from each neighbourhood of the knowledge graph, conditioned on each predicate. For instance, the “person’s” representation should be similar to the “elephant’s” given the context of wearing glasses, since they both have a nose. The attention mechanism, combined with the GNN’s message passing, aims to model those properties. Meaningful relation-based embeddings that capture commonsense knowledge will pave the way for detecting unseen scenarios.
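As an illustration of what a contrastive loss asks of the embeddings, here is a minimal sketch of an InfoNCE-style objective in plain Python. The three-dimensional vectors are made-up stand-ins for predicate-conditioned embeddings, and InfoNCE is an assumed choice; the text above does not specify which contrastive loss we use.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss: low when the anchor is closer to the positive than to the negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))

# Hypothetical embeddings conditioned on the predicate "wearing glasses".
person = [1.0, 0.2, 0.0]
elephant = [0.9, 0.3, 0.1]  # shares the relevant property with "person"
cloud = [0.0, 0.1, 1.0]     # does not

loss_good = info_nce(person, elephant, [cloud])
loss_bad = info_nce(person, cloud, [elephant])
print(loss_good, loss_bad)
```

Minimising such a loss pulls together entities that behave alike under a given predicate and pushes apart those that do not, which is the property needed to transfer a relation to an unseen object combination.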

Manos Zaranis

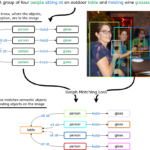

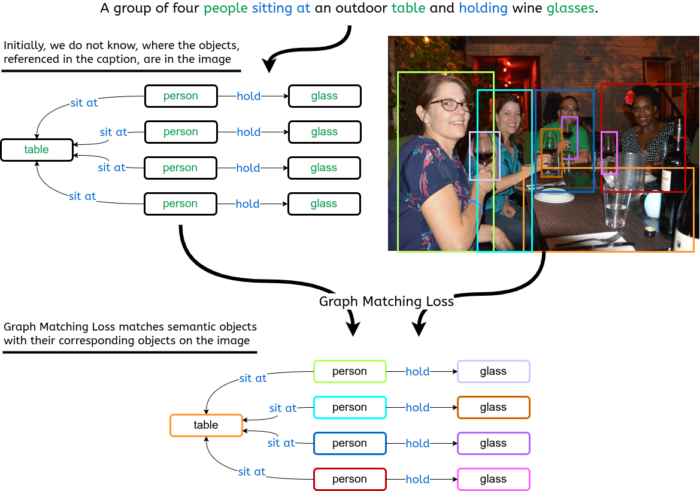

Weakly Supervised Visual Relationship Detection

Our approach discards annotated graphs and uses only image captions, which are inherently salient, to extract semantic scene graphs and weakly train our models using a Graph Matching Loss function. The benefits of this approach are twofold. First, we are able to scale our training data to the available large-scale image-text datasets. Second, our models learn to give priority to the image relationships that would be most meaningful to a human.
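The exact form of the Graph Matching Loss is not given here, so the following is only a sketch of the general idea under stated assumptions: each triplet parsed from the caption is assigned to a distinct predicted edge, and the loss is the average negative log-likelihood of the best assignment. The function name, the input format and the brute-force search are all hypothetical simplifications.

```python
import math
from itertools import permutations

def matching_loss(caption_triplets, predicted_edges, floor=1e-6):
    """Average negative log-likelihood of the best one-to-one assignment.

    caption_triplets: list of (subject, predicate, object) parsed from a caption.
    predicted_edges: list of dicts, one per predicted edge, mapping triplets to probabilities.
    Returns infinity if there are fewer predicted edges than caption triplets.
    """
    if not caption_triplets:
        return 0.0
    best = math.inf
    # Brute force over assignments; only viable for very small graphs.
    for assignment in permutations(range(len(predicted_edges)), len(caption_triplets)):
        cost = 0.0
        for triplet, edge_idx in zip(caption_triplets, assignment):
            cost += -math.log(predicted_edges[edge_idx].get(triplet, floor))
        best = min(best, cost / len(caption_triplets))
    return best

caption = [("person", "riding", "horse"), ("horse", "on", "grass")]
predicted = [
    {("horse", "on", "grass"): 0.8, ("person", "riding", "horse"): 0.05},
    {("person", "riding", "horse"): 0.7},
    {("person", "wearing", "hat"): 0.9},
]

loss = matching_loss(caption, predicted)
print(loss)
```

The third predicted edge is left unmatched and contributes nothing, so only relations that the caption mentions drive the loss. For graphs of realistic size, the brute-force search would be replaced by a polynomial-time assignment solver such as the Hungarian algorithm.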

*Thesis project co-supervised by Prof. Petros Maragos @NTUA

Alexandros Benetatos

Publications

A. Benetatos, M. Diomataris, V. Pitsikalis, P. Maragos, “Generating Salient Scene Graphs with Weak Language Supervision”, EUSIPCO, 2023

M. Parelli, D. Mallis, M. Diomataris, V. Pitsikalis, “Interpretable Visual Question Answering via Reasoning Supervision”, International Conference on Image Processing (ICIP), 2023

M. Diomataris, N. Gkanatsios, V. Pitsikalis, P. Maragos, “Grounding Consistency: Distilling Spatial Common Sense for Precise Visual Relationship Detection”, In Proc. ICCV, 2021

N. Gkanatsios, V. Pitsikalis, P. Maragos, “From Saturation to Zero-Shot Visual Relationship Detection Using Local Context”, In Proc. BMVC, 2020

N. Gkanatsios, V. Pitsikalis, P. Koutras, A. Zlatintsi, P. Maragos, “Deeply Supervised Multimodal Attentional Translation Embeddings for Visual Relationship Detection”, In Proc. ICIP, 2019

N. Gkanatsios, V. Pitsikalis, P. Koutras, A. Zlatintsi, P. Maragos, “Showcasing Deeply Supervised Multimodal Attentional Translation Embeddings: a Demo for Visual Relationship Detection”, In Proc. ICIP, 2019

N. Gkanatsios, V. Pitsikalis, P. Koutras, P. Maragos, “Attention-Translation-Relation Network for Scalable Scene Graph Generation”, In Proc. ICCV Workshops, 2019